Simple Steps to Improve your Site’s Crawlability and Indexability

All the search engine optimisation in the world will be for nothing if the search engines themselves can’t find your webpages.

So how do you boost your site’s crawlability and indexability, and make it easy for the bots to do their thing?

Most SEO strategies are built on a foundation of content and keywords. Those are vital components of a well-ranked website, but so is discoverability.

That is where crawling and indexing become just as important.

Crawlability is all about how easily those search engine bots can scan your website’s pages before indexing them.

Indexability measures how easily your webpages can be analysed and added to the search engine’s index.

Both processes need to be made easy, and fortunately doing so isn’t a dark art. Let’s take a closer look at the elements of your website that affect crawling and indexing, along with some ways to make sure your site is ready when the bots come calling!

Ways you can Improve Crawling & Indexing

We know they are a big deal; here are a few things you can do to make sure they are done optimally...

Improving your Page Loading Times

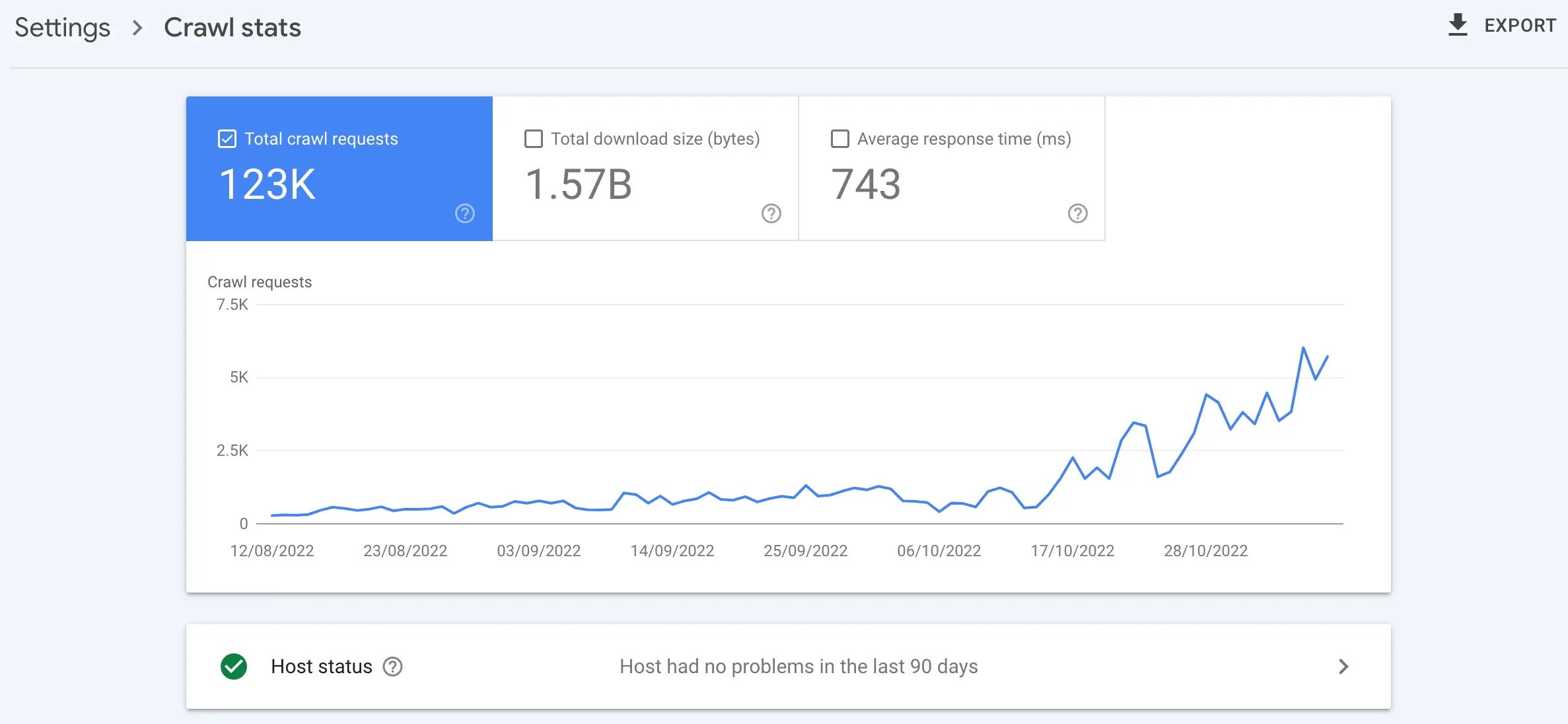

Search engine bots don’t have an infinite amount of time to crawl your webpages (after all, they have billions more to catalogue).

This limit, often called the ‘crawl budget’, caps how many of your pages a bot will crawl in a given period, and bots are not known for their patience. If your pages are slow to load, the bot gets through fewer of them before it moves on, and pages it never reaches won’t be indexed.

If you think that’s catastrophic for your SEO, you are right. You can check your site’s speed using Google Search Console or tools such as Screaming Frog.

Quick fixes to improve loading speeds:

- Server or hosting platform upgrades

- Enabling compression

- Reducing redirects

- Minifying CSS, HTML & JavaScript
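Compression works because text files like HTML, CSS and JavaScript are highly repetitive. As a rough illustration only (this is not a server configuration), the Python sketch below gzips a hypothetical product-listing page with the standard library and reports how much smaller the transfer would be. On a real site, compression is normally switched on in your web server, hosting panel or CDN settings rather than in application code.

```python
import gzip


def compression_saving(payload: str) -> float:
    """Return the fraction of bytes saved by gzip-compressing a text payload."""
    raw = payload.encode("utf-8")
    compressed = gzip.compress(raw)
    return 1 - len(compressed) / len(raw)


# Hypothetical page markup: 200 near-identical product cards, as a listing page might have.
sample_html = "<ul>" + "".join(
    f'<li class="product-card"><a href="/products/{i}">Product {i}</a></li>'
    for i in range(200)
) + "</ul>"

if __name__ == "__main__":
    size = len(sample_html.encode("utf-8"))
    print(f"Uncompressed: {size} bytes")
    print(f"gzip saves about {compression_saving(sample_html):.0%} of that")
```

The more repetitive the markup, the bigger the saving, which is why compression is usually one of the cheapest speed wins available.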

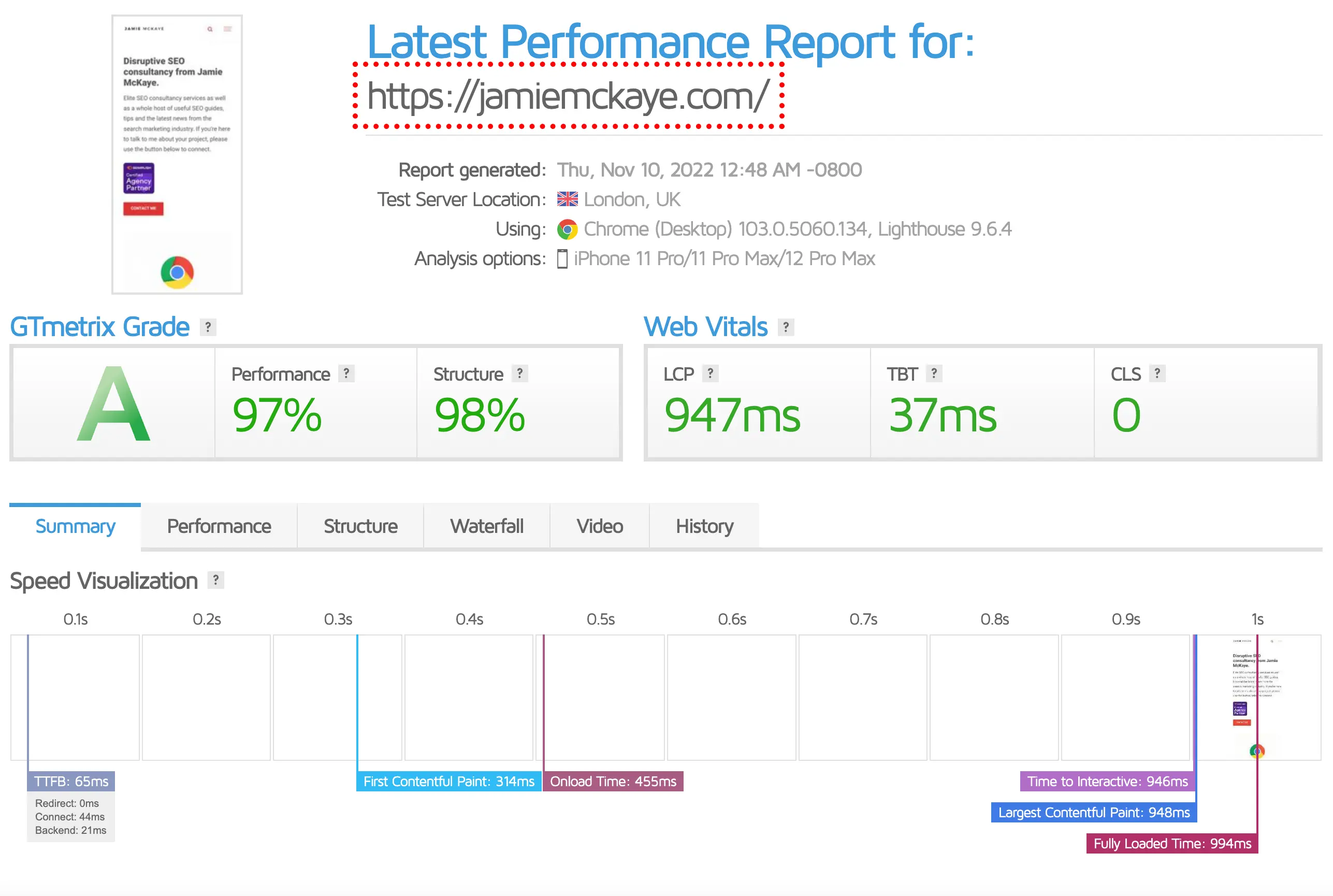

You can also check your site’s loading times with Pingdom or GTmetrix.

Submitting your Sitemap to Google

Assuming you haven’t told it not to (for example, by blocking it in your robots.txt file), sooner or later Google will try to crawl your site. That’s a good thing, but it won’t help you climb onto page 1 of the SERPs while you are waiting.
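The usual way of telling Google not to crawl part of a site is a robots.txt file, so it is worth confirming that your rules aren’t accidentally blocking pages you care about. Python’s standard-library `urllib.robotparser` can evaluate a set of rules offline, as in the minimal sketch below. The rules and URLs are hypothetical examples, not recommendations, and Python’s parser may not resolve conflicting Allow and Disallow rules exactly the way Googlebot does, so treat this as a quick sanity check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search

Sitemap: https://www.example.com/sitemap_index.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules supplied as a string, without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for url in ("https://www.example.com/blog/seo-guide",
                "https://www.example.com/checkout/basket"):
        status = "crawlable" if rules.can_fetch("Googlebot", url) else "blocked"
        print(f"{url}: {status}")
```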

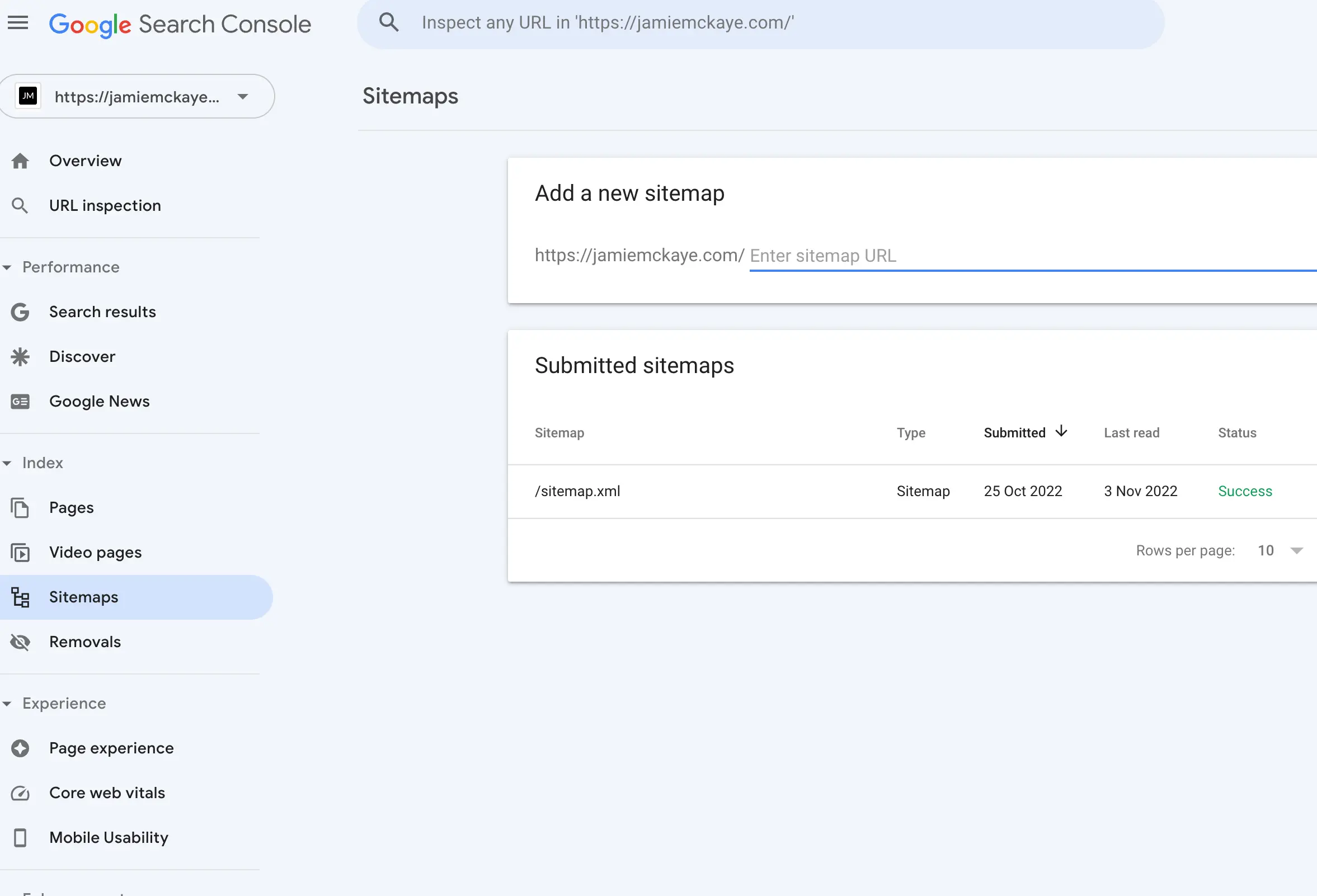

That is why it is a good idea to submit a sitemap to Google Search Console and keep it up to date as your site changes.

This file, which usually lives in your site’s root directory, serves as a roadmap for search engines. An up-to-date sitemap lists direct links to every page you want indexed, allowing search engines like Google to learn about many pages at once instead of having to follow link after link to discover pages buried deep in your site.

If your site is large or has many levels of pages, or if you frequently add pages and content, then a sitemap can make a world of difference.
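To show what that file actually contains, here is a minimal Python sketch that builds a sitemap following the sitemaps.org protocol: a `<urlset>` element holding one `<url>` entry per page, each with a `<loc>` (the page address) and an optional `<lastmod>` date. The page URLs and dates are hypothetical, and in practice your CMS or an SEO plugin usually generates this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, str]) -> str:
    """Build sitemap XML from a mapping of page URL -> last-modified date (YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages.items():
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url          # full, absolute URL of the page
        ET.SubElement(entry, "lastmod").text = lastmod  # when the page last changed
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages for an example site.
    print(build_sitemap({
        "https://www.example.com/": "2024-01-15",
        "https://www.example.com/blog/seo-guide": "2024-02-01",
    }))
```

You would save the output in your root directory (for example as sitemap.xml) and then submit its URL in Search Console.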

Follow these steps to submit a sitemap to Google Search Console:

- Sign in to Search Console

- Select your website from the sidebar

- Click on ‘Sitemaps’ (under the Index section)

- If there are any out-of-date or invalid sitemaps, such as an old sitemap.xml, remove them

- Enter your sitemap’s filename (for example, ‘sitemap_index.xml’) in the ‘Add a new sitemap’ field to complete the sitemap URL

- Submit the changes

Auditing New Content

Every website publishes new pages from time to time, and many also regularly update existing pages.

Making sure this new or refreshed content is being indexed is important.

You can check this in Google Search Console (the URL Inspection tool shows whether a specific page is indexed). Doing so as part of a more comprehensive site-wide audit is always advisable, so you can spot other areas where your SEO strategy might be falling short.

Remember, there is a range of free tools out there to help you scale the audit process, such as:

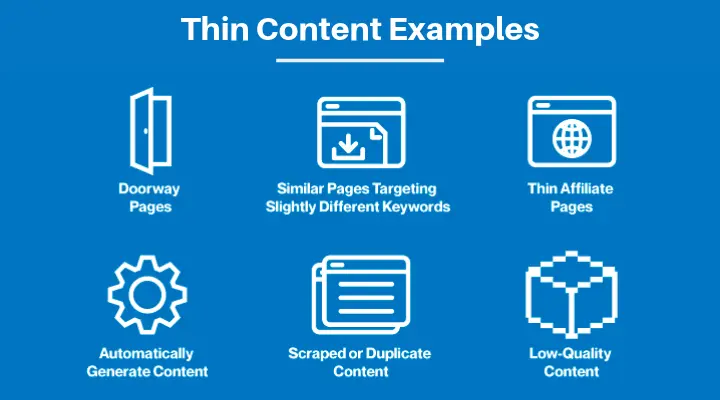

Rid your Site of Duplicate or Low-Quality Content

When Google crawls your site and finds content it doesn’t consider valuable, there is a risk that the content won’t be indexed.

Such ‘unworthy’ content might be poorly written, not unique to your site, or lacking the external signals that demonstrate its authority and value.

Duplicate content is another potential issue for crawlers. Your site’s code and URL structure can make the same content reachable at more than one URL, leaving bots unsure which version they should index. Pagination issues, session IDs and redundant content are all known to cause problems like this.

Red flags such as these can often trigger an alert in Google Search Console, warning you that Google is encountering more URLs than it expected. Even if you don’t get an alert, it is still good SEO housekeeping to check for missing or duplicated tags and for URLs with extra characters that might be confusing search engine crawlers.
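To see how session IDs and other extra characters multiply URLs, here is a minimal Python sketch that groups crawled URL variants which collapse to the same address once the noise is stripped. The set of parameters treated as noise, and the choice to ignore trailing slashes, are hypothetical assumptions; which parameters are safe to ignore depends entirely on your site. Genuine duplicates are then usually resolved by choosing one preferred URL and pointing to it with a canonical tag or a redirect.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that change the URL without changing the page content.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}


def normalise(url: str) -> str:
    """Reduce a URL to one comparable form: lowercase host, no noise parameters,
    no trailing slash (an assumption), no fragment. Path case is kept, since
    paths can be case-sensitive."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query) if k.lower() not in IGNORED_PARAMS]
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path,
                       urlencode(sorted(query)), ""))


def find_duplicates(urls: list[str]) -> dict[str, list[str]]:
    """Group crawled URLs that collapse to the same normalised address."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalise(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


if __name__ == "__main__":
    crawled = [  # hypothetical crawl output
        "https://www.example.com/shoes/?sessionid=abc123",
        "https://www.example.com/shoes",
        "https://WWW.example.com/shoes/?utm_source=newsletter",
        "https://www.example.com/hats",
    ]
    for preferred, variants in find_duplicates(crawled).items():
        print(preferred, "<-", variants)
```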

You can fix low-quality or duplication problems by doing things such as:

- Determining which pages are not being indexed and then reviewing the target queries for them

- Replacing, removing or refreshing web pages when needed

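- Fixing tags (such as title, meta description and canonical tags)

- Changing Google’s access levels (for example, with noindex tags or robots.txt rules)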
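Two tags worth checking when you fix tags and access levels are the robots meta tag (a `noindex` value asks search engines not to index the page) and the canonical link (which names the preferred URL for duplicated content). The minimal sketch below uses Python’s built-in `html.parser` to pull both out of a page’s HTML. The sample markup is hypothetical, and a real audit would also need to check the `X-Robots-Tag` HTTP header, which this sketch does not look at.

```python
from html.parser import HTMLParser


class IndexabilityParser(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page's HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.robots_directives: list[str] = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # html.parser already lowercases tag and attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() in {"robots", "googlebot"}:
            self.robots_directives += [
                d.strip().lower() for d in attributes.get("content", "").split(",")
            ]
        elif tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href") or None


def check_indexability(html: str) -> dict:
    """Report whether a page asks not to be indexed and which URL it names as canonical."""
    parser = IndexabilityParser()
    parser.feed(html)
    parser.close()
    return {
        # "none" is shorthand for "noindex, nofollow".
        "noindex": any(d in {"noindex", "none"} for d in parser.robots_directives),
        "canonical": parser.canonical,
    }
```

A page that should rank but reports `"noindex": True` is an obvious candidate for fixing first.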

Dealing with Redirects

Websites naturally evolve over time, and redirects are an essential part of that evolution. How else can we send a visitor to a newer or more relevant page?

However, redirects should always be used with care...

One of the more common issues is the redirect chain, where one redirect leads to another before (in theory) reaching the intended destination.

Worse still is the dreaded redirect loop, where the initial redirect leads to another, then another and so on, until it eventually points back to the original page, so neither visitors nor bots ever reach a real page.

As you might imagine, Google does not see this as a positive signal!
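Chains and loops are easy to spot once you have a list of your redirects, for example exported from your server configuration or from a crawler such as Screaming Frog. The Python sketch below assumes that list has already been loaded into a simple source-to-target mapping (the URLs are hypothetical) and follows each redirect hop by hop, flagging chains of more than one hop and any loops.

```python
def trace_redirect(start: str, redirects: dict[str, str]) -> tuple[list[str], bool]:
    """Follow redirects from `start`; return the path taken and whether it loops."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, True  # we have been here before: a redirect loop
        seen.add(current)
    return path, False


def audit_redirects(redirects: dict[str, str]) -> dict[str, list[str]]:
    """List every redirect chain (more than one hop) and every loop in the mapping."""
    report: dict[str, list[str]] = {"chains": [], "loops": []}
    for source in redirects:
        path, is_loop = trace_redirect(source, redirects)
        if is_loop:
            report["loops"].append(" -> ".join(path))
        elif len(path) > 2:  # source, at least one intermediate hop, destination
            report["chains"].append(" -> ".join(path))
    return report


if __name__ == "__main__":
    redirects = {  # hypothetical redirect map
        "/old-page": "/newer-page",
        "/newer-page": "/newest-page",
        "/a": "/b",
        "/b": "/a",
    }
    print(audit_redirects(redirects))
```

The usual fix for a chain is to point the original URL straight at its final destination with a single 301; a loop needs at least one of its redirects removed or re-targeted.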

Other common redirect issues that regularly wreak havoc with a site are:

- Redirecting to the homepage as an easy option

- Not paying attention to case-sensitive URLs

- Using 302 (temporary) redirects instead of 301 (permanent) redirects for pages that have moved for good

- Failing to keep track of the changes

Repairing Broken Links

Not only will broken links frustrate your site’s users, they will also wreak havoc on your site’s crawlability by leaving bots at dead ends.

Those things combined equal bad news for your SEO...

Finding broken links can seem like a huge undertaking if your site is a big one. However, Google Search Console and Google Analytics can both help you find issues such as 404 errors, pointing you in the right direction to pinpoint problems.

Broken links can be caused by a number of things:

- Entering an incorrect URL when the link is created

- Removal of a page that was linked to

- Changing the URL of a page

- Updates to site structure

- Malfunctioning plugins and other broken elements within a page
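For a first pass at internal broken links, you can compare every link on a page against the list of paths your site actually serves. The minimal sketch below does this offline with Python’s `html.parser`. The page HTML and the set of live paths are hypothetical inputs (you might build them from a crawl or from your sitemap), and it only checks internal links; external links that have gone dead would need an actual HTTP request.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def find_broken_internal_links(page_url: str, html: str, live_paths: set[str]) -> list[str]:
    """Return hrefs on the page that point to internal paths not in `live_paths`."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    site = urlsplit(page_url).netloc
    broken = []
    for href in collector.hrefs:
        target = urlsplit(urljoin(page_url, href))  # resolve relative links
        if target.scheme not in ("http", "https") or target.netloc != site:
            continue  # skip mailto:, tel: and external links
        if (target.path or "/") not in live_paths:
            broken.append(href)
    return broken
```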

As you can see, a good understanding of your website’s crawlability and indexability is more than just useful. These two factors combined can have a real impact on search rankings, which makes it vital to regularly audit your site for any issues that could be confusing or misleading search engines.

Be sure to check out my full SEO guide for information on all things SEO.
